Customer Service x Large Language Models

By Tara Stokes

Our Perspective

In Generative AI: Context Is All You Need, we shared our excitement about applying large language models (LLMs) like GPT-3 to use cases across the enterprise. When evaluating business workflows that would benefit from LLMs’ retrieval and synthesis capabilities, customer service stands out to us as a particularly strong fit.

Today, customer service teams operate in information silos, deal with spikes in request volume, and struggle to hire and retain talent. These characteristics set the stage for new, context-aware search and generation tools that can scale high-quality, in-house customer service in ways that were not previously possible.

Companies using LLMs may:

- Make customer service agents more productive through AI copilots.

- Enable intelligent, automated resolution of customer service requests.

- Build omni-channel and/or multi-lingual product offerings.

- Sell to businesses that purchase this technology for their in-house customer service teams.

Why is now the right time to apply LLMs to customer service?

LLMs, also referred to as foundation models, are spurring renewed enthusiasm for applying AI to enterprise use cases by lowering training costs and making state-of-the-art machine learning more accessible.

In the past, models had to be built and trained specifically for each use case, which made them expensive to deploy and maintain in production. Built on a neural network architecture called the transformer and trained on vast datasets, LLMs have demonstrated the ability to handle common enterprise tasks like information retrieval and text generation out of the box, without explicit task-specific training. The industry refers to this as zero-shot learning (no task examples) or few-shot learning (a handful of examples supplied in the prompt). These capabilities reduce training costs by shrinking the training data corpus and the computation needed to deploy state-of-the-art machine learning models. LLMs can also be fine-tuned for domain-specific use cases (e.g., healthcare insurance summarization) with smaller datasets.
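To make few-shot learning concrete: the task examples live in the prompt rather than in a separate training run. The Python sketch below assembles such a prompt for ticket triage. The category names, example tickets, and prompt layout are hypothetical, and no particular model API is assumed; the resulting string would be passed to whichever LLM a team uses. Passing an empty example list yields a zero-shot prompt.

```python
# A minimal sketch of few-shot prompting for ticket triage.
# The labels, example tickets, and prompt format are hypothetical;
# the resulting string would be sent to whichever LLM API a team uses.
from typing import List, Tuple

# Hypothetical labeled examples shown to the model inside the prompt itself.
FEW_SHOT_EXAMPLES: List[Tuple[str, str]] = [
    ("Where is my package? It was due yesterday.", "delivery_status"),
    ("I was charged twice for the same order.", "billing"),
    ("How do I reset my password?", "account_access"),
]


def build_few_shot_prompt(ticket: str, examples: List[Tuple[str, str]]) -> str:
    """Assemble an instruction, the labeled examples, and the new ticket.

    With an empty example list this becomes a zero-shot prompt.
    """
    lines = ["Classify each customer ticket into a category.", ""]
    for text, label in examples:
        lines.append(f"Ticket: {text}")
        lines.append(f"Category: {label}")
        lines.append("")
    # The model is expected to complete the final "Category:" line.
    lines.append(f"Ticket: {ticket}")
    lines.append("Category:")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_few_shot_prompt("Can I change my delivery date to Friday?", FEW_SHOT_EXAMPLES))
```

Adding a new category would mean adding an example line to the prompt rather than collecting and labeling a new training set, which is the cost reduction described above.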

We believe LLMs could allow in-house customer service teams to achieve up to 10x productivity gains through a mix of intelligent automation and AI-augmented productivity tools for agents.

What are the shortcomings of LLMs?

- LLMs are still costly and computationally intensive to train, fine-tune, and host.

- They can consume only a limited amount of input at a time.

- They carry a risk of “hallucinating,” or producing output that is false.

The Market and Opportunity

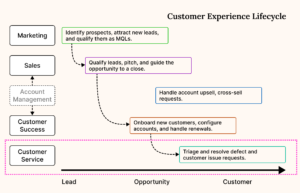

Customer experience encompasses all touch points a customer has with a business – before, during, and after a sale. These touch points include:

While all stages of the customer experience lifecycle are interesting to Point72 Ventures, here we will focus on the opportunities in the Customer Service segment.

What did we observe in network conversations and market research?

We believe that customer service presents a large market opportunity…

- Changing consumer preferences increase the complexity of customer service workflows.

- Customers today are digitally savvy and demand better customer service. [Zendesk, 2022]

- Businesses want solutions, especially as customer service is increasingly viewed as an organic growth center rather than a cost center.

- Customer service headcount has traditionally scaled linearly with the number of customers; and yet, businesses are struggling to hire and retain agent talent. [Harvard Business Review, 2021]

- Compounding the issue, the volume of customer service requests continues to increase.

…for solutions addressing the pain points related to these manual, rote tasks.

- Request volume is volatile and unpredictable.

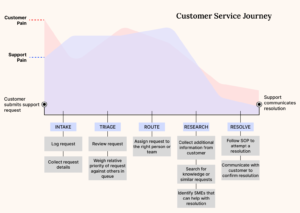

- Issue resolution is a fragmented, convoluted process.

- Software that improves agent productivity improves the agent experience and reduces agent churn.

What’s the opportunity?

To date, we’ve invested in companies that have the potential to lower costs by automating simple tasks or augmenting workflows that require human review.

- [Automate] Superhuman conversational AI agents like PolyAI and Tenyx allow businesses to reduce the cost of staffing customer service agents around the clock and handle simple requests from intake to resolution.

- PolyAI works with FedEx to autonomously assist customers who call to check on the status of their package, select preferred delivery timeslots, or even reschedule their delivery date. The company also works with restaurants to handle customers who call to book a table, change an existing reservation, or cancel a reservation entirely.

- [Augment] Agent augmentation solutions like Tomato allow businesses to tap into cheaper offshore workforces.

- Tomato is building technology that aims to make agents more intelligible in real time.

- [Augment] Agent augmentation solutions like Unbabel can help businesses do more with the current workforce they have.

- Unbabel’s real-time language translation technology can allow agents to understand and communicate with customers from around the globe through chat.

We believe there are additional opportunities around AI solutions that can create improvements in the research and resolution process.

- [Automate] Large, pre-trained models may allow businesses to leverage conversational AI agents that can handle more complex issues from intake to resolution.

- [Augment] AI-augmented tools for agents could equip support teams with technology that will help them resolve complex issues faster.

Technology

Why do we believe LLMs are important?

- Customer service operations produce rich sets of training data that can be used to configure LLMs.

- LLMs are particularly applicable to the customer service space due to the corpus of domain-specific, text-based training data that arises from customer service requests, tickets, and transcripts of calls.

- This data can be used to train or fine tune LLMs in order to alleviate the workload on customer service agents and enhance the customer experience.

- These LLMs can provide the underlying architecture for virtual agents, enhance the intelligibility of human agents, and improve existing workflows, such as routing and analytics, by parsing customer service tickets and surfacing customer insights.

- LLMs have powerful retrieval and generative capabilities.

- They can search through more text, faster, than any human, which could allow LLM-based conversational AI to handle complex customer conversations more effectively than previous rules-based bots.

- LLM-augmented agent tools could anticipate, synthesize, and deliver the right answers and context to agents for each task. This would eliminate the need for the agent to open up a bunch of tabs and software tools to go fishing for information.

- We’ve observed that LLMs perform well at generating meaningful, logical content of medium length. Because most customer service interactions require only short-form text (a few sentences), LLMs are well positioned to generate responses that are coherent and relevant.

- LLMs are able to handle many tasks with few-shot learning capabilities.

- This means that they can quickly generalize to unseen text and issues, and generate compelling responses, with just a few examples. LLMs are thus particularly well suited for customer service, where there is a long tail of potential customer requests that are impossible to plan for up front.

- For example, LLM-based conversational AI could handle many more edge cases without being explicitly trained on finely labeled, curated datasets.

- LLM-augmented agent tools could also detect many more edge cases in how a customer might describe an issue without being explicitly trained to recognize those cases.

- LLMs require less configuration effort from customer service teams, meaning there is a faster anticipated overall time to value.

- First-generation chatbots required customer success teams to manually define rules and outcomes (e.g., “if customer inputs X, output Y”), a significant up-front configuration effort for limited triaging capabilities.

- LLMs are pre-trained and can be fine-tuned on company-specific data. While fine-tuning requires implementation time and costs, the process is automated and the model could be deployed immediately afterward, reducing the time to value.
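To make the configuration burden of first-generation chatbots concrete, here is a minimal Python sketch of the “if customer inputs X, output Y” pattern. It is not an LLM and not any vendor’s product; the trigger phrases and canned replies are hypothetical. The second call in the usage block shows the limitation: the same intent, phrased in a way nobody anticipated, falls through to the fallback.

```python
# A minimal sketch of a first-generation, rules-based chatbot:
# "if customer inputs X, output Y". The rules and replies are hypothetical.
from typing import Dict

# Every phrasing the bot should recognize must be enumerated by hand.
RULES: Dict[str, str] = {
    "where is my package": "You can track your package using the link in your confirmation email.",
    "reset my password": "Use the 'Forgot password' link on the sign-in page.",
}

FALLBACK = "Sorry, I didn't understand. Transferring you to an agent."


def rules_based_reply(message: str) -> str:
    """Return the canned reply for the first rule whose trigger phrase appears in the message."""
    normalized = message.lower()
    for trigger, reply in RULES.items():
        if trigger in normalized:
            return reply
    # Any phrasing nobody anticipated falls through to a human agent.
    return FALLBACK


if __name__ == "__main__":
    print(rules_based_reply("Hi, where is my package?"))   # matches a rule
    print(rules_based_reply("My parcel hasn't shown up"))  # same intent, no rule matches
```

Covering the long tail this way means writing ever more rules up front, whereas a pre-trained model can, in principle, generalize to the unseen phrasing from a few examples.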

Conclusion

The proliferation of department-specific software created SaaS sprawl and data silos.

- Okta’s 2022 annual report estimated that organizations use about 89 applications on average. Larger organizations (2,000+ employees) may have over 180. [Okta, 2022]

- Marketing, sales, and customer success teams have their own systems, creating tool sprawl and data silos.

Data integration and pipeline solutions enable organizations to centralize and analyze data from across disparate systems.

- SaaS platforms increasingly offer API / database replica access to allow developers to access and centralize information across systems.

- Businesses have started to develop ecosystems of integrations that allow insights from one system to enrich another.

- For example, businesses have started to leverage pre-built or custom integrations to be able to hydrate customer engagement systems with information from other tools (e.g., surfacing Salesforce data in Zendesk).

LLMs will take advantage of data centralization and more robust API pathways to integrate ML more intelligently into end-user workflows.

- Coupling LLMs’ few-shot and zero-shot capabilities with vast, centralized enterprise datasets could enable machine learning models to handle many downstream tasks without explicit training.

- We believe businesses will be able to deploy LLM-powered capabilities to customer-facing interfaces (e.g., issue management platforms, chat) more easily as enterprise API infrastructure continues to mature, for example through more flexible API design, troubleshooting tools, and monitoring.

We expect in-house teams (businesses) to capture more value from LLMs than outsourced service providers.

- Businesses can reduce reliance on outsourced solutions by using LLMs to automate less complex customer requests and equip agents with AI to resolve more complex issues faster.

- Businesses leverage outsourced solutions to offload workload, tap into cheaper labor pools, or expand into new geographic regions.

- While outsourced solutions allow businesses to tap into cheaper labor pools and expand coverage into new regions, external solution providers lack the data access and context necessary to provide holistic customer service experiences.

- Outsourced solutions like contact centers also have per-hour business models that reduce the value of increased productivity.

Please reach out if you are…

- Forming your own perspective on the LLM space

- Building the next generation of LLM-enabled tools to assist in research and problem resolution

- Looking to join an exciting early-stage startup working on LLMs

- Searching for customer service solutions that leverage LLMs